YarnClientSchedulerBackend: SchedulerBackend for YARN in Client Deploy Mode

YarnClientSchedulerBackend is the YarnSchedulerBackend used when a Spark application is submitted to a YARN cluster in client deploy mode.

Note: client deploy mode is the default deploy mode of Spark applications submitted to a YARN cluster.

YarnClientSchedulerBackend submits a Spark application when started and waits for the Spark application until it finishes (successfully or not).

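| Name | Initial Value | Description |
|---|---|---|
| client | (undefined) | Client to submit and monitor a Spark application. Created when YarnClientSchedulerBackend is started. |
| monitorThread | (undefined) | MonitorThread that monitors the Spark application. Created and started when YarnClientSchedulerBackend is started. |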

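Tip: Enable DEBUG logging level for org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend logger to see what happens inside.

Add the following line to conf/log4j.properties:

log4j.logger.org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend=DEBUG

Refer to Logging.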

Tip: Enable [...] Add the following line to [...] Refer to Logging. Use with caution though, as there will be a flood of messages in the logs every second.

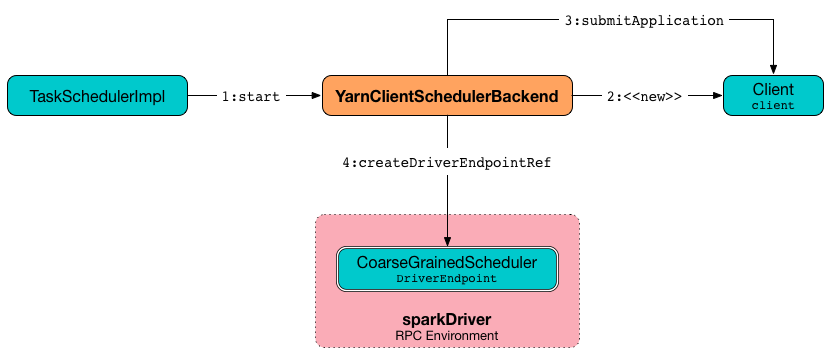

Starting YarnClientSchedulerBackend: start Method

start(): Unit

Note: start is a part of SchedulerBackend contract executed when TaskSchedulerImpl starts.

start creates Client (to communicate with YARN ResourceManager) and submits a Spark application to a YARN cluster.

After the application is launched, start starts a MonitorThread (the application state monitor thread). Along the way it also calls the supertype’s start.

Internally, start takes spark.driver.host and spark.driver.port properties for the driver’s host and port, respectively.

If web UI is enabled, start sets spark.driver.appUIAddress as webUrl.

You should see the following DEBUG message in the logs:

DEBUG YarnClientSchedulerBackend: ClientArguments called with: --arg [hostport]

Note: hostport is the spark.driver.host and spark.driver.port properties separated by :, e.g. 192.168.99.1:64905.

start creates a ClientArguments (passing in a two-element array with --arg and hostport).
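
The hostport value and the two-element argument array described above can be sketched as follows. This is a minimal illustration, not Spark's own code: a plain Map stands in for SparkConf, and the HostPortSketch object and its method names are hypothetical.

```scala
object HostPortSketch {
  // Assumption: a plain Map stands in for SparkConf in this sketch.
  def hostport(conf: Map[String, String]): String = {
    val host = conf("spark.driver.host")
    val port = conf("spark.driver.port")
    s"$host:$port" // host and port separated by ':'
  }

  // The two-element array that the text says is passed to ClientArguments.
  def clientArgs(conf: Map[String, String]): Array[String] =
    Array("--arg", hostport(conf))
}
```

With spark.driver.host set to 192.168.99.1 and spark.driver.port set to 64905, clientArgs yields the two elements --arg and 192.168.99.1:64905.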

start sets the total expected number of executors to the initial number of executors.

Caution: FIXME Why is this part of subtypes since they both set it to the same value?

start creates a Client (with the ClientArguments and SparkConf).

start submits the Spark application to YARN (through Client) and saves ApplicationId (with undefined ApplicationAttemptId).

start starts YarnSchedulerBackend (that in turn starts the top-level CoarseGrainedSchedulerBackend).

Caution: FIXME Would be very nice to know why start does so in a NOTE.

If spark.yarn.credentials.file is defined, start also starts ConfigurableCredentialManager.

Caution: FIXME Why? Include a NOTE to make things easier.

start creates and starts monitorThread (to monitor the Spark application and stop the current SparkContext when it stops).

stop

stop is part of the SchedulerBackend Contract.

stop stops the internal helper objects (monitorThread and client) and "announces" the stop to other services through Client.reportLauncherState. Along the way it also calls the supertype’s stop.

stop makes sure that the internal client has already been created (i.e. it is not null), but not necessarily started.

stop stops the internal monitorThread using MonitorThread.stopMonitor method.

It then "announces" the stop using Client.reportLauncherState(SparkAppHandle.State.FINISHED).

Next, it passes the call on to the supertype’s stop and, once the supertype’s stop has finished, it calls YarnSparkHadoopUtil.stopExecutorDelegationTokenRenewer and then stops the internal client.
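
The order of the calls made by stop can be summarised in a small sketch. It only records the names of the calls in the order the text gives them; StopOrderSketch is hypothetical, nothing here touches Spark or YARN, and the use of require for the "client has been created" check is an assumption.

```scala
object StopOrderSketch {
  // Returns the calls in the order described in the text.
  // Assumption: the "client is not null" check is modelled with require.
  def stop(clientCreated: Boolean): Seq[String] = {
    require(clientCreated, "client has not been created")
    Seq(
      "monitorThread.stopMonitor()",
      "client.reportLauncherState(SparkAppHandle.State.FINISHED)",
      "super.stop()",
      "YarnSparkHadoopUtil.stopExecutorDelegationTokenRenewer()",
      "client.stop()"
    )
  }
}
```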

Once everything has stopped cleanly, you should see the following INFO message in the logs:

INFO YarnClientSchedulerBackend: Stopped

Waiting Until Spark Application Runs: waitForApplication Internal Method

waitForApplication(): Unit

waitForApplication waits until the current application is running (using Client.monitorApplication).

If the application has FINISHED, FAILED, or has been KILLED, a SparkException is thrown with the following message:

Yarn application has already ended! It might have been killed or unable to launch application master.

You should see the following INFO message in the logs for RUNNING state:

INFO YarnClientSchedulerBackend: Application [appId] has started running.

Note: waitForApplication is used when YarnClientSchedulerBackend is started.
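
The state check in waitForApplication can be illustrated with a self-contained sketch. The AppState hierarchy is a hypothetical stand-in for YARN's application states, IllegalStateException stands in for SparkException, and the check method name is invented for this example.

```scala
object WaitForApplicationSketch {
  // Hypothetical stand-in for the YARN application states named in the text.
  sealed trait AppState
  case object Running extends AppState
  case object Finished extends AppState
  case object Failed extends AppState
  case object Killed extends AppState

  // Returns the INFO message for RUNNING and throws for the terminal states.
  // Assumption: IllegalStateException stands in for SparkException.
  def check(appId: String, state: AppState): String = state match {
    case Finished | Failed | Killed =>
      throw new IllegalStateException(
        "Yarn application has already ended! " +
          "It might have been killed or unable to launch application master.")
    case Running =>
      s"Application $appId has started running."
  }
}
```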

asyncMonitorApplication

asyncMonitorApplication(): MonitorThread

asyncMonitorApplication internal method creates a separate daemon MonitorThread thread called "Yarn application state monitor".

Note: asyncMonitorApplication does not start the daemon thread.
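
Creating a named daemon thread without starting it looks roughly like this on the JVM. The sketch uses a plain java.lang.Thread instead of MonitorThread, and the AsyncMonitorSketch object and the body parameter are hypothetical.

```scala
object AsyncMonitorSketch {
  // Builds the thread but leaves starting it to the caller.
  def asyncMonitorApplication(body: () => Unit): Thread = {
    val t = new Thread(new Runnable { def run(): Unit = body() })
    t.setName("Yarn application state monitor")
    t.setDaemon(true) // a daemon thread does not keep the JVM alive
    t
  }
}
```

The returned thread stays in the NEW state until the caller invokes start on it.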

MonitorThread

MonitorThread is an internal class that monitors a Spark application submitted to a YARN cluster in client deploy mode.

When started, MonitorThread requests Client to monitor a Spark application (with logApplicationReport disabled).

Note: Client.monitorApplication is a blocking operation and hence it is wrapped in MonitorThread to be executed on a separate thread.

When the call to Client.monitorApplication has finished, it is assumed that the application has exited. You should see the following ERROR message in the logs:

ERROR Yarn application has already exited with state [state]!

That leads to stopping the current SparkContext (using SparkContext.stop).
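
The pattern of wrapping a blocking call in a dedicated thread and reacting when it returns can be sketched as below. This is not Spark's MonitorThread: the monitor function is a hypothetical stand-in for Client.monitorApplication, stopContext stands in for SparkContext.stop, the lastError field exists only so the message can be inspected, and modelling stopMonitor as an interrupt is an assumption.

```scala
object MonitorThreadSketch {
  // monitor: hypothetical stand-in for the blocking Client.monitorApplication,
  //          returning the final application state as a string.
  // stopContext: hypothetical stand-in for SparkContext.stop.
  final class MonitorThread(monitor: () => String, stopContext: () => Unit)
      extends Thread {
    setName("Yarn application state monitor")
    setDaemon(true)

    // Holds the error message once the application has exited.
    @volatile var lastError: Option[String] = None

    override def run(): Unit =
      try {
        val state = monitor() // blocks until the application exits
        lastError = Some(s"Yarn application has already exited with state $state!")
        stopContext()
      } catch {
        case _: InterruptedException => () // assumed effect of stopMonitor
      }

    // Assumption: stopping the monitor is modelled as interrupting the thread.
    def stopMonitor(): Unit = interrupt()
  }
}
```

Because the blocking call runs on its own daemon thread, the thread that created the monitor remains free to continue while the application is being watched.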